This page documents what I achieved during each of the lab sessions in the Emerging Technologies module. I will cover each week in as much detail as I can recall.

Since the hand-in for this initial assignment requires 3 blogs, I have linked the other 2 here.

Current progress on researching/development for my own – Emerging Technologies (662110_A23_T1) development portfolio – Bloggers Unite (hulldesign.co.uk)

Research on the different types of immersive technology – Week 2 immersive technology research – Bloggers Unite (hulldesign.co.uk)

Week 1 (October 5th)

For our first lab assignment, we were tasked with producing a simple render of a moving scene in Maya, lasting no longer than 10 seconds. I did this in Maya 2022 on my laptop, using a copy of the environment from my 3D character animation course last year. I decided to reuse it because it was far more convenient than building an entirely new environment.

Setting up the VR camera was fairly easy with the provided instructions, but I hit a roadblock at the rendering stage. My laptop did not have enough power to render the sequence (it would manage maybe 1 or 2 frames before Maya crashed), so I had to use one of the PCs in the DIAM Building to render the whole sequence, then copy the renders back to the laptop to edit into a VR video.

Due to time constraints, I had to cut the rendering short. It produced 218 of the animation’s 240 frames, which was still more than enough to make the video work.
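To put the shortfall into numbers, here is a minimal sketch of the frame maths. The frame rate of 24 fps is my own assumption, inferred from 240 frames filling the 10 second limit, and the function name `clip_duration_seconds` is purely illustrative.

```python
def clip_duration_seconds(frame_count: int, fps: float) -> float:
    """Return the playback length in seconds for a given number of frames."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_count / fps


# Assumption: 24 fps, inferred from 240 frames covering the 10 second limit.
ASSUMED_FPS = 24

planned = clip_duration_seconds(240, ASSUMED_FPS)   # full animation
rendered = clip_duration_seconds(218, ASSUMED_FPS)  # frames actually rendered

print(f"planned: {planned:.2f} s, rendered: {rendered:.2f} s, "
      f"missing: {planned - rendered:.2f} s")
# prints "planned: 10.00 s, rendered: 9.08 s, missing: 0.92 s"
```

Under that assumption, the cut-off render loses just under a second of footage, which is why the video still worked.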

After putting the video together, I uploaded it to YouTube. Unfortunately, it was automatically set and locked as a Short, which forced the video into portrait mode rather than landscape and made it rather inconvenient to view. I wasn’t able to find any setting to change it to a normal video format, so I left it as it was.

Whilst the video was rendering on the computers, I got to work on the second task for this week, which was to experiment with the MASH system in Maya (yes, it’s spelt in all caps) and to create a handful of simple effects to demonstrate our understanding of the software.

Again, the lab session asked for a render, which was not exactly possible, so I came up with the next best solution: using OBS (Open Broadcaster Software) to record and demonstrate what I was capable of doing with the MASH tools. Whilst my initial attempts were rather primitive, I believe I was still able to create something of some value.

Week 2 – Research into immersive tech (October 12th)

For week 2, we were given the task of researching and gaining an understanding of the 3 different types of immersive technology: Virtual Reality, Augmented Reality and Mixed/Extended Reality.

Given how extensive this topic is and how much research is needed to cover it thoroughly, I have put all of the information in a separate document, which is linked at the top of this page.

As for the lab session itself, we were tasked with experimenting with FrameVR, an online platform where users can create virtual experiences without any coding or programming experience. This gives it a rather low barrier to entry for users who are relatively new to the whole concept of VR.

I wasn’t sure what theme to go with, so I picked one at random and played around with the tools I had. The frame itself was nothing of much note: just a few objects thrown about, along with a trigger event that fires when you come into contact with the portal at the far back of the area.

Link to the frame – Frame (framevr.io)

Week 3 (October 19th)

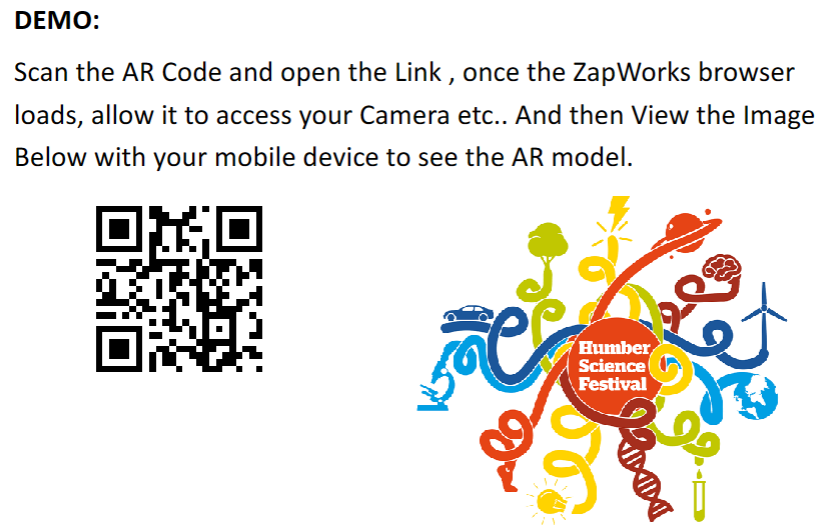

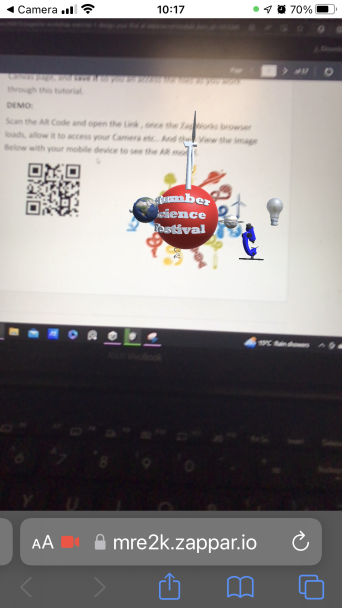

Week 3’s workshop was based around an AR exercise using Zapworks, a program that the University of Hull has used in the past to create AR experiences for one of its events. One of these was shown to us in the workshop in the form of a code for us to scan.

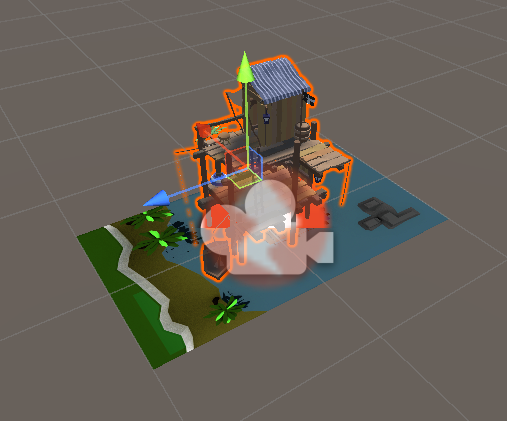

When the user scans the QR code with a mobile phone (or really, any device capable of scanning a QR code), it should produce results similar to the image below, where a 3D graphic pops out that the user can look at through their device.

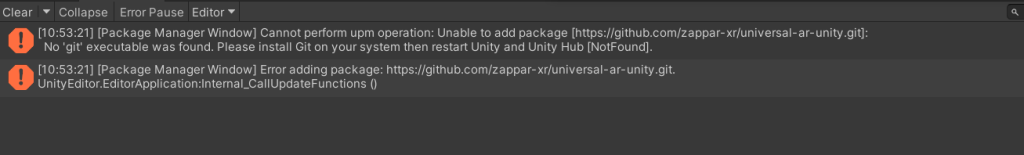

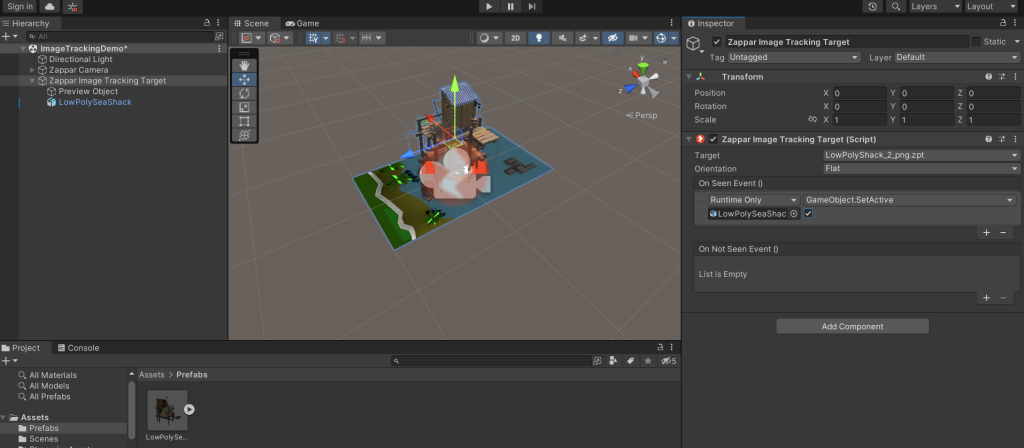

We were tasked with creating one of our own using Unity in WebGL form. I needed assistance from a tutor to install the required software, since the laptop I used was not properly equipped and installing it proved to be a bit of a pain.

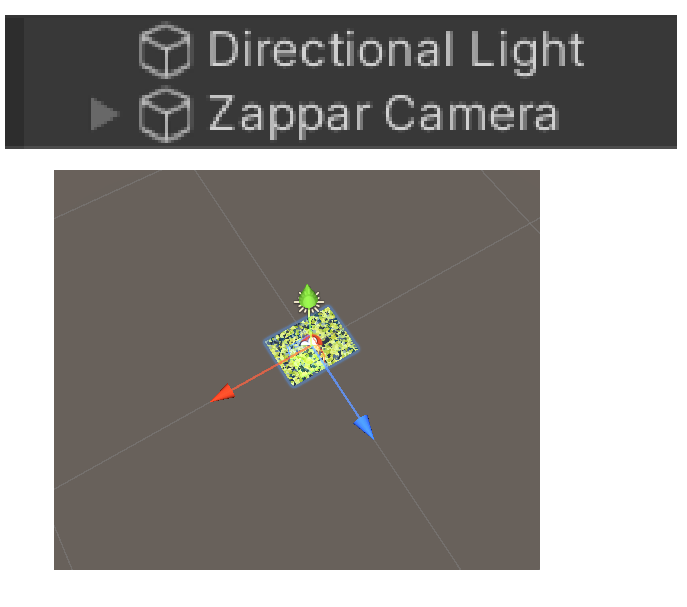

Eventually, I got everything installed and working, so progress could begin. I followed the instructions provided to us on Canvas to set up a Zappar camera, along with an image for it to track, added from the menu.

Following the instructions, I then used the Zappar image trainer with the provided PNG image and placed the provided model on top of the image.

I then attached the model to the image tracker and assigned it various functions through the Inspector. This allows the object to respond and be shown when the tracker detects that the image is being looked at.

Week 4 – VR art session

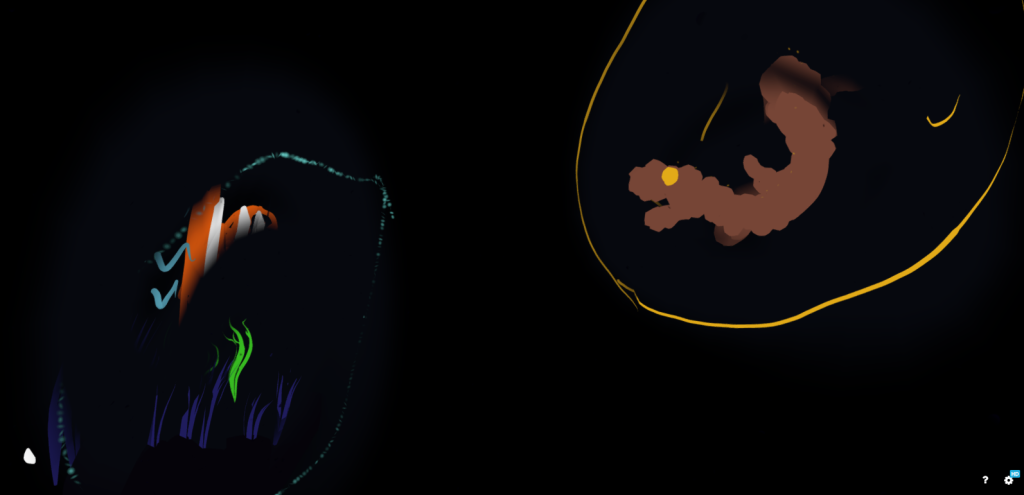

Week 4 of the Emerging Technologies course had us using VR headsets to create something in Open Brush as a group exercise.

The task was to create a diorama art piece within the program. My time in the VR headset was limited to around 10 minutes at a stretch because I experienced a bit of VR sickness. The constant breaks admittedly made learning Open Brush rather difficult.

Kier, who I was assigned to work with, and I agreed on a simple underwater scene with a few pieces of aquatic life in a dark setting. We took turns adding our own pieces, though because of the sickness mentioned earlier, he contributed more than I did, adding the fish, the jellyfish and the eel. I contributed the bubble effects and the little auras around each of Kier’s creations, and added a small volcano towards the bottom corner as more of a background object.

The two of us continued working on the environment until the end of the session, when it came time to upload the project file to Sketchfab so that we could both use it later for our WordPress blogs. Kier saved it to his Sketchfab account and gave me a link so that I could view it and take a few screenshots of what we had managed to achieve in class.

Overall, whilst I do feel that progress on our environment was partially limited by my inability to stay in VR for longer than 10 to 15 minutes at a time, I still felt that I had managed to accomplish a substantial amount in the time we were allocated.

Week 5 – Ethical concerns

When creating any form of media that users can experience or interact with, it is important to take ethical concerns into consideration. This applies especially to highly sensitive topics (e.g. tragic events, private information, etc.) and to media that is expected to reach a wide audience.

It is critical that the content planned for showcase is properly considered and thoroughly examined, since it could provoke an unintended reaction and spark a negative response from users of the product.

Ethical concerns fall into many different categories, ranging from the commonly expected to the very specific. Which concerns need to be discussed depends almost entirely on what the content is.

For example, even if the content in question were a news app that showed stories based on the user’s interests, it would have to consider what the user liked and disliked, the credibility of the articles, their sources, and so on.
